From the Leaders section

Fake nudes

Sex, lies and politics

As deepfake technology spreads, expect more bogus sex tapes of female politicians

Adulterer, pervert, traitor, murderer. In France in 1793, no woman was more relentlessly slandered than Marie Antoinette. Political pamphlets spread baseless rumours of her depravity. Some drawings showed her with multiple lovers, male and female. Others portrayed her as a harpy, a notoriously disagreeable mythical beast that was half bird-of-prey, half woman. Such mudslinging served a political purpose. The revolutionaries who had overthrown the monarchy wanted to tarnish the former queen’s reputation before they cut off her head.

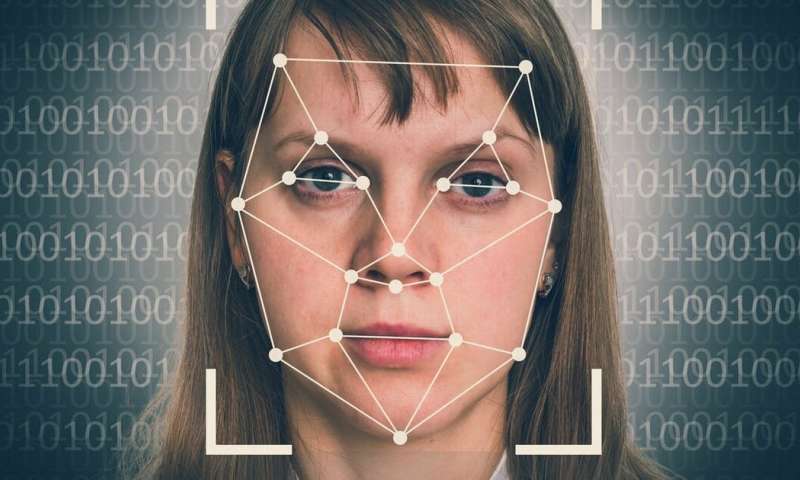

She was a victim of something ancient and nasty that is becoming worryingly common: sexualised disinformation to undercut women in public life. People have always invented rumours about such women. But three things have changed. Digital technology makes it easy to disseminate libel widely and anonymously. “Deepfake” techniques (manipulating images and video using artificial intelligence) make it cheap and simple to create convincing visual evidence that people have done or said things which they have not. And powerful actors, including governments and ruling parties, have gleefully exploited these new opportunities. A report by researchers at Oxford this year found well-organised disinformation campaigns in 70 countries, up from 48 in 2018 and 28 in 2017.

Consider the case of Rana Ayyub, an Indian journalist who tirelessly reports on corruption, and who wrote a book about the massacre of Muslims in the state of Gujarat when Narendra Modi, now India’s prime minister, was in charge there. For years, critics muttered that she was unpatriotic (because she is a Muslim who criticises the ruling party) and a prostitute (because she is a woman). In April 2018 the abuse intensified. A deepfake sex video, which grafted her face over that of another woman, was published and went viral. Digital mobs threatened to rape or kill her. She was “doxxed”: someone published her home address and phone number online. It is hard to prove who was behind this campaign of intimidation, but its purpose is obvious: to silence her, and any other woman thinking of criticising the mighty.
