Science and technology

Computer vision

Eye robot

Poor eyesight remains one of the main obstacles to letting robots loose among humans.

But it is improving, in part by aping natural vision.

ROBOTS are getting smarter and more agile all the time.

They disarm bombs, fly combat missions, put together complicated machines, even play football.

Why, then, one might ask, are they nowhere to be seen, beyond war zones, factories and technology fairs?

One reason is that they themselves cannot see very well.

And people are understandably wary of purblind contraptions bumping into them willy-nilly in the street or at home.

All that a camera-equipped computer sees is lots of picture elements, or pixels.

A pixel is merely a number reflecting how much light has hit a particular part of a sensor.

The challenge has been to devise algorithms that can interpret such numbers as scenes composed of different objects in space.

This comes naturally to people and, barring certain optical illusions, takes no time at all as well as precious little conscious effort.

Yet emulating this feat in computers has proved tough.

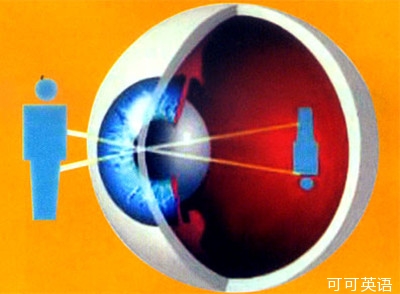

In natural vision, after an image is formed in the retina it is sent to an area at the back of the brain, called the visual cortex, for processing.

The first nerve cells it passes through react only to simple stimuli, such as edges slanting at particular angles.

They fire up other cells, further into the visual cortex, which react to simple combinations of edges, such as corners.

Cells in each subsequent area discern ever more complex features, with those at the top of the hierarchy responding to general categories like animals and faces, and to entire scenes comprising assorted objects.

All this takes less than a tenth of a second.

The outline of this process has been known for years, and in the late 1980s Yann LeCun, now at New York University, pioneered an approach to computer vision that tries to mimic the hierarchical way the visual cortex is wired.

He has been tweaking his convolutional neural networks ever since.

Seeing is believing

A ConvNet begins by swiping a number of software filters, each several pixels across, over the image, pixel by pixel.

Like the brain's primary visual cortex, these filters look for simple features such as edges.

The upshot is a set of feature maps, one for each filter, showing which patches of the original image contain the sought-after element.
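In code, this filter sweep is a two-dimensional convolution. The sketch below is minimal and assumes a tiny grayscale image stored as rows of pixel values, each pixel just a number as described earlier. It also assumes two hand-picked 3x3 edge filters of the Sobel type. The image, the filters and the helper name `convolve2d` are all illustrative. A ConvNet learns its filter weights rather than having them chosen. Each filter produces its own feature map.

```python
# Illustrative sketch: the image, filters and helper name are hypothetical.

def convolve2d(image, kernel):
    """Slide `kernel` over `image` one pixel at a time (stride 1, no padding).

    As in most ConvNet code, this is strictly cross-correlation: the kernel
    is not flipped. Each output value is the weighted sum of the pixels the
    kernel currently covers, so large values mark patches matching the filter.
    """
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    feature_map = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 5x5 grayscale image: dark on the left, bright on the right,
# so it contains one vertical edge and no horizontal ones.
image = [[0, 0, 0, 9, 9] for _ in range(5)]

# Hand-picked edge filters (Sobel-style); a trained ConvNet learns its own.
filters = {
    "vertical_edge": [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]],
    "horizontal_edge": [[-1, -2, -1], [0, 0, 0], [1, 2, 1]],
}

# One feature map per filter.
feature_maps = {name: convolve2d(image, k) for name, k in filters.items()}
for name, fmap in feature_maps.items():
    print(name, fmap)
```

Brightness changes only from left to right in this image. So the vertical-edge map responds along the boundary, and the horizontal-edge map is zero everywhere.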

A series of transformations is then performed on each map in order to enhance it and improve the contrast.
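The article does not say what these transformations are. One common choice in ConvNets of this period was a pointwise nonlinearity (rectification) followed by contrast normalization. The sketch below assumes those two steps. Its normalization is a simplified whole-map version; real systems typically normalize over a small local neighbourhood. The helper names and the sample map are hypothetical.

```python
import math

# Illustrative sketch: helper names and the sample map are hypothetical.


def rectify(feature_map):
    """Pointwise rectification: keep positive responses, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def normalize_contrast(feature_map, eps=1e-8):
    """Rescale a map to zero mean and unit standard deviation.

    Simplified, whole-map stand-in (an assumption) for local contrast
    normalization, which applies the same idea within a small window
    around each location.
    """
    values = [v for row in feature_map for v in row]
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    return [[(v - mean) / (std + eps) for v in row] for row in feature_map]


raw_map = [[-4.0, 0.0, 36.0], [-2.0, 30.0, 36.0], [0.0, 12.0, 36.0]]
enhanced = normalize_contrast(rectify(raw_map))
for row in enhanced:
    print([round(v, 2) for v in row])
```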

Next, the maps are swiped again, but this time rather than stopping at each pixel, the filter takes a snapshot every few pixels.

That produces a new set of maps of lower resolution.

These highlight the salient features while reining in computing power.
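Taking a snapshot every few pixels is usually called pooling or subsampling. The sketch below assumes max pooling over non-overlapping 2x2 windows. The window size and stride of 2 are illustrative choices, and early ConvNets often averaged each window instead of taking its maximum. Each pooled value keeps the strongest response in its window. A 4x4 map therefore shrinks to 2x2, a quarter of the values for later stages to process.

```python
# Illustrative sketch: helper name and sample map are hypothetical.

def max_pool(feature_map, size=2, stride=2):
    """Keep the largest value in each size x size window, moving `stride` pixels at a time."""
    height, width = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, height - size + 1, stride):
        row = []
        for c in range(0, width - size + 1, stride):
            window = [feature_map[r + i][c + j] for i in range(size) for j in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


fmap = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 8],
    [1, 1, 7, 5],
]
print(max_pool(fmap))  # [[6, 2], [2, 9]]
```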

The whole process is then repeated, with several hundred filters probing for more elaborate shapes rather than just a few scouring for simple ones.

The resulting array of feature maps is run through one final set of filters.

These classify objects into general categories, such as pedestrians or cars.
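The final classification step can be pictured as one score per category. Each score is a weighted sum of the last feature maps, flattened into a list, and the highest-scoring category wins. The sketch below assumes a linear scoring layer followed by softmax, a standard choice. The two labels, the four feature values and every weight are made up for illustration. A real ConvNet learns its weights and works with far more features.

```python
import math

# Illustrative sketch: labels, features and weights are all hypothetical.


def classify(features, weights, biases, labels):
    """Score each category with a weighted sum, then convert scores to probabilities."""
    scores = [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    # Softmax; subtracting the max score keeps math.exp from overflowing.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(labels)), key=lambda k: probs[k])
    return labels[best], probs


labels = ["pedestrian", "car"]        # hypothetical categories
features = [6.0, 2.0, 2.0, 9.0]       # e.g. a flattened 2x2 pooled map
weights = [[0.5, 0.0, 0.0, -0.2],     # made-up weights for "pedestrian"
           [-0.1, 0.0, 0.1, 0.4]]     # made-up weights for "car"
biases = [0.0, 0.0]

label, probs = classify(features, weights, biases, labels)
print(label, [round(p, 3) for p in probs])
```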

Many state-of-the-art computer-vision systems work along similar lines.

The uniqueness of ConvNets lies in where they get their filters.

Traditionally, these were simply plugged in one by one, in a laborious manual process that required an expert human eye to tell the machine what features to look for, in future, at each level.

That made systems which relied on them good at spotting narrow classes of objects but inept at discerning anything else.

Dr LeCun's artificial visual cortex, by contrast, lights on the appropriate filters automatically as it is taught to distinguish the different types of object.

When an image is fed into the unprimed system and processed, the chances are it will not, at first, be assigned to the right category.

But, shown the correct answer, the system can work its way back, modifying its own parameters so that the next time it sees a similar image it will respond appropriately.

After enough trial runs, typically 10,000 or more, it makes a decent fist of recognising that class of objects in unlabelled images.
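Working back from the correct answer is gradient-based learning. The error on each labelled example nudges every parameter in the direction that reduces it. A full ConvNet propagates this error back through all of its layers, filters included; this is called backpropagation. The sketch below shrinks that to a single logistic unit trained on four hypothetical two-number feature vectors. That is enough to show an untrained system getting some answers wrong and repeated passes over the labelled data fixing them. The data, learning rate and epoch count are assumptions.

```python
import math

# Illustrative sketch: a single logistic unit standing in for a whole ConvNet;
# the data, learning rate and epoch count are hypothetical.


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Return 1 ("car") or 0 ("not car") for feature vector x."""
    return 1 if sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5 else 0


def train(examples, lr=0.5, epochs=200):
    """Stochastic gradient descent on the cross-entropy loss of a logistic unit."""
    w, b = [0.0] * len(examples[0][0]), 0.0
    for _ in range(epochs):
        for x, y in examples:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # d(loss)/d(pre-activation) for sigmoid + cross-entropy
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


# Hypothetical labelled data: (feature vector, correct answer).
examples = [([1.0, 0.1], 1), ([0.9, 0.2], 1), ([0.1, 0.9], 0), ([0.2, 0.8], 0)]

untrained = [predict([0.0, 0.0], 0.0, x) for x, _ in examples]
w, b = train(examples)
trained = [predict(w, b, x) for x, _ in examples]
print("before:", untrained, "after:", trained)
```

With all-zero weights, the untrained unit labels everything "car". After training it matches every label.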

This still requires human input, though.

The next stage is unsupervised learning, in which instruction is entirely absent.

Instead, the system is shown lots of pictures without being told what they depict.

It knows it is on to a promising filter when the output image resembles the input.

In a computing sense, resemblance is gauged by the extent to which the input image can be recreated from the lower-resolution output.

When it can, the filters the system had used to get there are retained.
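The reconstruction test described above is the idea behind an autoencoder. The sketch below is a deliberately tiny version built on several assumptions:

- Each input is a pair of neighbouring pixel values. The numbers are made up but nearly equal, as neighbouring pixels in real images tend to be.
- The "filter" is a weight vector `w` that squeezes the pair into one number, `h = w·x`, which plays the role of the lower-resolution output.
- The reconstruction is `h·w`.

Gradient descent adjusts `w` to shrink the reconstruction error. The helper names, data, learning rate and step count are illustrative. A real system uses many convolutional filters and usually a nonlinearity.

```python
# Illustrative sketch: a one-unit, tied-weight linear autoencoder;
# helper names, data, learning rate and step count are hypothetical.

def reconstruction_error(w, data):
    """Total squared difference between each input and its reconstruction."""
    total = 0.0
    for x in data:
        h = sum(wi * xi for wi, xi in zip(w, x))  # encode: two numbers -> one
        recon = [h * wi for wi in w]              # decode: one number -> two
        total += sum((xi - ri) ** 2 for xi, ri in zip(x, recon))
    return total


def train_autoencoder(data, w, lr=0.01, steps=1000):
    """Batch gradient descent on the mean of ||x - (w.x) w||^2 over the data."""
    n = len(data)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x in data:
            h = sum(wi * xi for wi, xi in zip(w, x))
            r = [xi - h * wi for xi, wi in zip(x, w)]      # residual x - h*w
            r_dot_w = sum(ri * wi for ri, wi in zip(r, w))
            for j in range(len(w)):
                # Partial derivative of ||x - (w.x) w||^2 with respect to w[j].
                grad[j] += -2.0 * (r_dot_w * x[j] + h * r[j]) / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


# Hypothetical pairs of neighbouring pixel values.
data = [[2.0, 2.1], [1.0, 0.9], [3.0, 2.9], [2.0, 2.2], [0.5, 0.4]]

w0 = [1.0, 0.0]  # starting filter: just copies the first pixel
w = train_autoencoder(data, w0)
print("error before:", round(reconstruction_error(w0, data), 3))
print("error after: ", round(reconstruction_error(w, data), 3))
print("learned filter:", [round(v, 3) for v in w])
```

Training ends with a filter that weights both pixels roughly equally. That one number then reconstructs the pair far better than the starting filter does, and this kind of filter is the one that would be kept.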

In a tribute to nature's nous, the lowest-level filters arrived at in this unaided process are edge-seeking ones, just as in the brain.

The top-level filters are sensitive to all manner of complex shapes.

Caltech-101, a database routinely used for vision research, consists of some 10,000 standardised images of 101 types of just such complex shapes, including faces, cars and watches.

When a ConvNet with unsupervised pre-training is shown the images from this database it can learn to recognise the categories more than 70% of the time.

This is just below what top-scoring hand-engineered systems are capable of—and those tend to be much slower.

This approach (which Geoffrey Hinton of the University of Toronto, a doyen of the field, has dubbed "deep learning") need not be confined to computer vision.

In theory, it ought to work for any hierarchical system: language processing, for example.

In that case individual sounds would be low-level features akin to edges, whereas the meanings of conversations would correspond to elaborate scenes.

For now, though, the ConvNet has proved its mettle in the visual domain.

Google has been using it to blot out faces and licence plates in its Street View application.

It has also come to the attention of DARPA, the research arm of America's Defence Department.

This agency provided Dr LeCun and his team with a small roving robot which, equipped with their system, learned to detect large obstacles from afar and correct its path accordingly—a problem that lesser machines often, as it were, trip over.

The scooter-sized robot was also rather good at not running into the researchers.

In a selfless act of scientific bravery, they strode confidently in front of it as it rode towards them at a brisk walking pace, only to see it stop in its tracks and reverse.

Such machines may not quite yet be ready to walk the streets alongside people, but the day they can is surely not far off.
