"This is all a conspiracy, don't you know that, it's a conspiracy."

"Yes, yes, yes!"

"Good evening, my fellow Americans.

Fate has ordained that the men who went to the moon to explore in peace will stay on the moon to rest in peace."

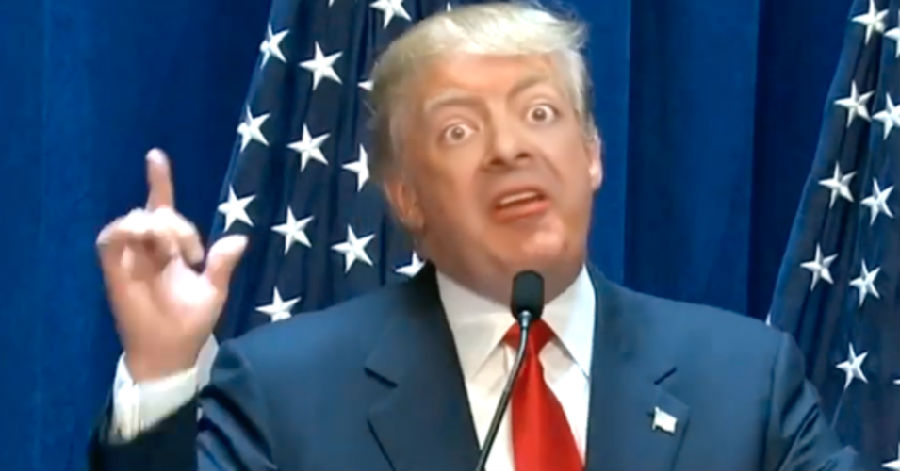

That President Nixon video you just watched is a deep fake.

It was created by a team at MIT as an educational tool

to highlight how manipulated videos can spread misinformation, and even rewrite history.

Deepfakes have become a new form of altering reality, and they're spreading fast.

The good ones can chip away at our ability to discern fact from fiction, testing whether seeing is really believing.

Some have playful intentions, while others can cause serious harm.

"People have had high profile examples that they put out that have been very good,

and I think that moved the discussion forward both in terms of, wow, this is what's possible with this given enough time and resources,

and can we actually tell at some point in time, whether things are real or not?

A deep fake doesn't have to be a complete picture of something.

It can be a small part that's just enough to really change the message of the medium."

"See, I would never say these things, at least not in a public address.

But someone else would.

Someone like Jordan Peele."

A deep fake is a video or an audio clip that's been altered to change the content using deep learning models.

The deep part of the deep fake that you might be accustomed to seeing often relies on a specific machine learning tool.

"A GAN is a generative adversarial network and it's a kind of machine learning technique.

So in the case of deep fake generation, you have one system that's trying to create a face, for example.

And then you have an adversary that is designed to detect deep fakes.

And you use these two together to help this first one become very successful

at generating faces that are very hard to detect.

And they just go back and forth.

And the better the adversary, the better the producer will be."
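
The back-and-forth described above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not how any real deep fake tool works: the "faces" are single numbers, the producer is a hypothetical linear function `a * z + b`, and the adversary is a hypothetical logistic classifier `sigmoid(w * x + c)`. The name `train_toy_gan` and all of its parameters are made up for this sketch.

```python
import math
import random


def sigmoid(t):
    # Logistic function, written to avoid overflow for large |t|.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=500, batch=32, lr=0.05, real_mean=4.0, seed=0):
    """Alternate adversary and producer updates on 1-D data (illustrative only)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # producer (generator): fake = a * z + b
    w, c = 0.0, 0.0  # adversary (discriminator): D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Adversary step: gradient ascent on log D(real) + log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += (1.0 - d) * x
            gc += (1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            gw -= d * x
            gc -= d
        w += lr * gw / batch
        c += lr * gc / batch

        # Producer step: gradient ascent on log D(fake), i.e. try to fool
        # the adversary that was just updated.
        ga = gb = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            ga += (1.0 - d) * w * z
            gb += (1.0 - d) * w
        a += lr * ga / batch
        b += lr * gb / batch

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("producer offset b:", b, "adversary weight w:", w)
```

Setups this small tend to chase each other in circles rather than settle, so the sketch is meant to show the alternating updates, not a converged result.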

One of the reasons GANs have become a go-to tool for deep fake creators is the data revolution that we're living in.

"Deep learning has been around a long time, neural networks were around in the '90s and they disappeared.

And what happened was the internet.

The internet is providing enormous amounts of data for people to be able to train these things with armies of people giving annotations.

That allowed these neural networks that really were starved for data in the '90s, to come to their full potential."

While this deep learning technology improves every day, it's still not perfect.

"If you try to generate the entire thing, it looks like a video game.

Much worse than a video game in many ways.

And so people have focused on just changing very specific things like a very small part of a face

to make it kind of resemble a celebrity in a still image, or being able to do that and allow it to go for a few frames in a video."

Deep fakes first started to pop up in 2017, after a Reddit user posted videos showing famous actresses in porn.

Today, these videos still predominantly target women,

but have widened the net to include politicians saying and doing things that haven't happened.

"It's a future danger.

And a lot of the groups that we work with are really focused on future dangers and potential dangers and being abreast of that."

One of these interested groups has been DARPA.

They sent out a call to researchers about a program called Media Forensics, also known as MediFor.

"It's a DARPA project that's geared towards the analysis of media.

And originally it started off as very much focused on still imagery, and detecting, did someone insert something into this image?

Remove something?

It was before deep fakes became prominent.

The project focus changed when this emerged."
