Listening Transcript

Research Aims to Give Robots Human-Like Social Skills

One argument for why robots will never fully measure up to people is that they lack human-like social skills.

But researchers are experimenting with new methods to give robots social skills to better interact with humans. Two new studies provide evidence of progress in this kind of research.

One experiment was carried out by researchers from the Massachusetts Institute of Technology (MIT). The team developed a machine learning system for self-driving vehicles that is designed to learn the social characteristics of other drivers.

The researchers studied driving situations to learn how other drivers on the road were likely to behave. Since not all human drivers act the same way, the data was meant to teach the driverless car to avoid dangerous situations.

The researchers say the technology uses tools borrowed from the field of social psychology. In this experiment, scientists created a system that attempted to decide whether a person's driving style is more selfish or selfless. In road tests, self-driving vehicles equipped with the system improved their ability to predict what other drivers would do by up to 25 percent.

In one test, the self-driving car was observed making a left-hand turn. The study found the system could cause the vehicle to wait before making the turn if it predicted that the oncoming drivers would act selfishly and might be unsafe. But when oncoming drivers were judged to be selfless, the self-driving car could make the turn without delay because it saw less risk of unsafe behavior.

Wilko Schwarting is the lead writer of a report describing the research. He told MIT News that any robot working with or operating around humans needs to be able to effectively learn their intentions to better understand their behavior.

"People's tendencies to be collaborative or competitive often spill over into how they behave as drivers," Schwarting said. He added that the MIT experiments sought to understand whether a system could be trained to measure and predict such behaviors.

The system was designed to understand the right behaviors to use in different driving situations. For example, even the most selfless driver should know that quick and decisive action is sometimes needed to avoid danger, the researchers noted.

The MIT team plans to expand its research model to include other things that a self-driving vehicle might need to deal with. These include predictions about people walking around traffic, as well as bicycles and other things found in driving environments.

The researchers say they believe the technology could also be used in vehicles with human drivers. It could act as a warning system against other drivers judged to be behaving aggressively.

Another social experiment involved a game competition between humans and a robot. Researchers from Carnegie Mellon University tested whether a robot's "trash talk" would affect humans playing a game against the machine. To "trash talk" is to talk about someone in a negative or insulting way, usually to get them to make a mistake.

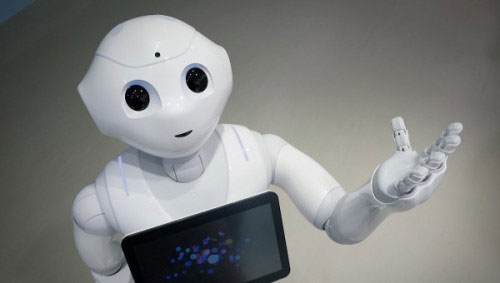

A humanoid robot, named Pepper, was programmed to say things to a human opponent like "I have to say you are a terrible player." Another robot statement was, "Over the course of the game, your playing has become confused."

In the study, each human participant played a game with the robot 35 times. The game, called Guards and Treasures, is used to study decision making. The study found that players criticized by the robot generally performed worse in the games than players who received praise.

One of the lead researchers was Fei Fang, an assistant professor at Carnegie Mellon's Institute for Software Research. She said in a news release that the study represents a departure from most human-robot experiments. "This is one of the first studies of human-robot interaction in an environment where they are not cooperating," Fang said.

The research suggests that humanoid robots have the ability to affect people socially just as humans do. Fang said this ability could become more important in the future when machines and humans are expected to interact regularly.

"We can expect home assistants to be cooperative," she said. "But in situations such as online shopping, they may not have the same goals as we do."

I'm Bryan Lynn.

Key Points

Key explanations:

1. measure up to: to meet (a standard); to live up to (expectations)

It was fatiguing sometimes to try to measure up to her standard of perfection.

有时候,力求达到她尽善尽美的标准让人觉得很累。

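2. interact with: to act on or communicate with each other (especially between a person and a computer, or between a computer and another machine)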

Millions of people want new, simplified ways of interacting with a computer.

数以百万计的人们想要新的简化方式实现人机交互。

3. be likely to do sth.: to be probable or expected to do something; to tend to do something

This is likely to revive consumer spending and a whole raft of consumer industries.

这可能会带动消费性支出和一大批消费工业的复苏。

4. without delay: immediately; with no postponement

We'll send you a quote without delay.

我们会立刻送一份报价给你。

Reference Translation (Chinese)

研究希望让机器人具备类似人类的社交技能

为什么机器人永远不能完全达到人类一样的水平?其中一个理由是,因为机器人缺少类似人类的社交技能。

但研究人员正在测试新方法,赋予机器人社交技能,以更好地与人类互动。两项新研究提供了此类研究取得进展的证据。

其中一项试验由麻省理工学院(简称MIT)的研究人员进行。该团队开发了一种用于自动驾驶车辆的机器学习系统,旨在学习其他司机的社会特征。

研究人员研究了驾驶情况,以了解路上其他驾驶者可能有的行为方式。因为不是所有人类驾驶员的行为方式都一样,所以这些数据旨在教导无人驾驶车辆避免危险情况。

研究人员表示,这项技术使用了从社会心理学领域借来的工具。在这项实验中,科学家开发了一个系统,以试图确定一个人的驾驶风格是更为自私还是更加无私。在道路测试中,配备这一系统的自动驾驶车辆预测其他司机行为的能力最多提高了25%。

在一项测试中,研究人员观察到自动驾驶车辆向左转弯。这项研究发现,如果预测到迎面而来的驾驶员会做出自私而且不安全的行为,那该系统会让车辆在转弯之前等待。但是当迎面驶来的车辆被判定为无私时,那自动驾驶车辆会立刻转弯,因为其认为出现不安全行为的风险很小。

一篇报告介绍了这项研究,威尔科·施瓦廷是报告的主要作者。他对麻省理工学院新闻网表示,任何与人类合作或在人类周围工作的机器人都必须能有效地学习人类的意图,以更好地理解人类的行为。

施瓦廷说:“人类的合作或竞争倾向往往会渗透到他们的驾驶行为中。”他还表示,麻省理工学院的实验试图了解系统在经过训练后能否判断和预测这类行为。

这一系统旨在了解不同驾驶情况中应该采用的正确行为。研究人员指出,举例来说,即使是最无私的司机也应该知道,避免危险有时需要迅速且果断的行动。

麻省理工学院团队计划扩大其研究模型,以涵盖自动驾驶车辆可能需要应对的其他问题。这包括预测车辆周围的行人以及在驾驶环境中发现的自行车和其他东西。

研究人员表示,他们认为这项技术也能应用在人类司机驾驶的车辆上。它可以作为预警系统,以应对其他被判定为行为激进的驾驶员。

另一项社会实验涉及人与机器人之间的游戏竞赛。卡内基梅隆大学的研究人员测试了机器人的“垃圾话”是否会影响与机器玩游戏的人类。“垃圾话”是以消极或侮辱的方式谈论某人,通常是为了让他们犯错。

人形机器人“佩珀”被设计成会向人类对手说一些话,比如“我不得不说,你真是一个糟糕的玩家”。机器人还会说:“在游戏过程中,你的表现变得很混乱。”

这项研究让每位参与者与机器人玩了35次游戏。该游戏名为《守卫与宝藏》,用于研究决策制定。研究发现,遭到机器人批评的玩家在游戏中的表现通常要比得到称赞的玩家差。

该研究的主导研究员之一是卡内基梅隆大学软件研究所的助理教授方菲(音译)。一篇新闻介绍了这项不同于大多数人类和机器人实验的研究,里面提到了方菲的看法。她说:“这是首批聚焦人类与机器人在非合作环境中进行互动的研究之一。”

研究表明,人形机器人有能力像人类那样在社交方面影响人类。方菲说,预计未来人类和机器人会经常互动,而届时这种能力就会变得更加重要。

她说:“我们可以期待家庭助手能和我们合作。但是在涉及网上购物等情形时,它们可能不会和我们有相同的目标。”

布莱恩·林恩报道。

Translation by Keke English (可可英语). Do not reproduce without authorization.